Brilliant Labs' Frame Are AI Glasses, And I Think This Is The Future Of The iPhone

The Apple Vision Pro has been the talk of the town over the past few days, as regular customers have started using the spatial computer. Unfortunately for this Vision Pro fan, the device isn't available in Europe, so I'll have to wait a while longer to get my hands on one.

I think the Vision Pro is incredibly important at this point in the history of computing. Apple is preparing for the post-iPhone era, a world where AR glasses will first supplement the iPhone and then replace it.

We're also witnessing the dawn of AI, with companies already envisioning AI-first devices that aren't phones, like the Humane Ai Pin, the Rabbit r1, and the brand-new Brilliant Labs Frame glasses.

The Frame, available for preorder for a tenth of the Vision Pro's price, is my favorite of the three. It delivers personal AI through AR, which is what I'll want from the iPhone down the road, once Apple's own generative AI and its actual AR glasses are ready. Until then, startups like Brilliant Labs should be on your radar.

The Frame glasses

The most obvious thing about the Frame, to me, is that it fixes a problem the Ai Pin and, to some extent, the r1 have. The Humane device has no real screen, only a laser projector that beams text onto your palm. A screen, like the r1's, solves that, but then you still have to deal with another device to get the AI features you want.

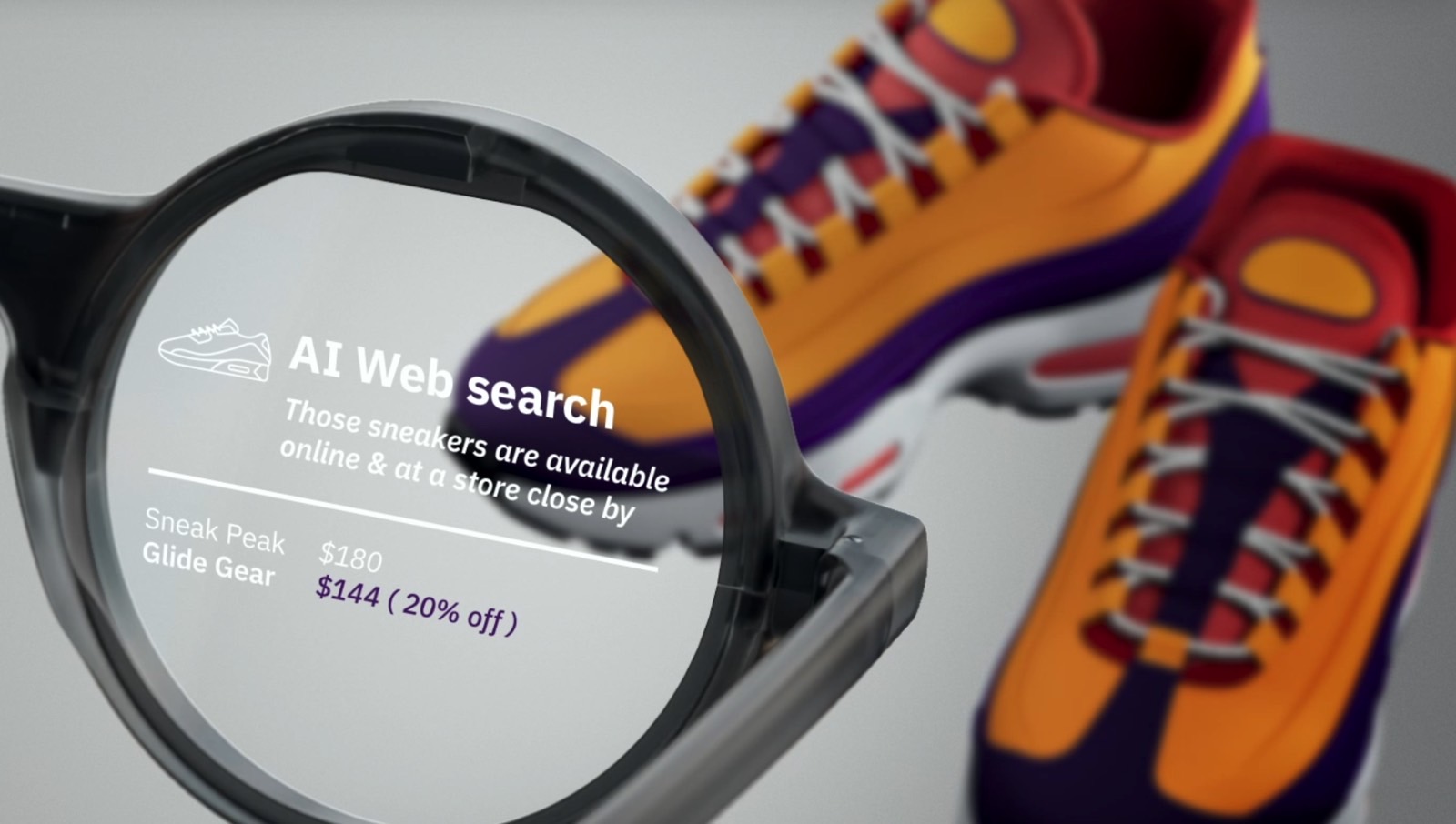

Brilliant Labs puts AR to good use here, projecting the AI features right in front of your eyes.

The Frame looks like the pair of glasses Steve Jobs would wear, and that's probably deliberate. Brilliant Labs is a startup founded by former Apple employees, and Apple has been a huge influence on them.

But unlike the spectacles Jobs used to wear, the Frame are smart glasses. They weigh just 39g, yet they should deliver all-day battery life for AI features. The AI that powers the Frame, called Noa, combines several existing AI models.

The AI features

On top of that, there's a front-facing camera that lets Noa see the world along with you, so it can answer your questions. Noa can translate foreign languages, explain things you see during the day that you want to know more about, and even generate images that only you can see.

Noa is multimodal, with OpenAI's GPT-4 powering some of those features. OpenAI's tech lets the AI see the world, and Whisper, OpenAI's speech-recognition model, will let it translate what you hear in real time. Perplexity, which also powers search on the Rabbit r1, will let you search the web for information about what you're looking at. Stability AI's Stable Diffusion will let the Frame generate imagery.

Noa is available to download on iPhone and Android, which hints at how the Frame will work: you'll connect the glasses to your phone to get this AR AI computing experience.

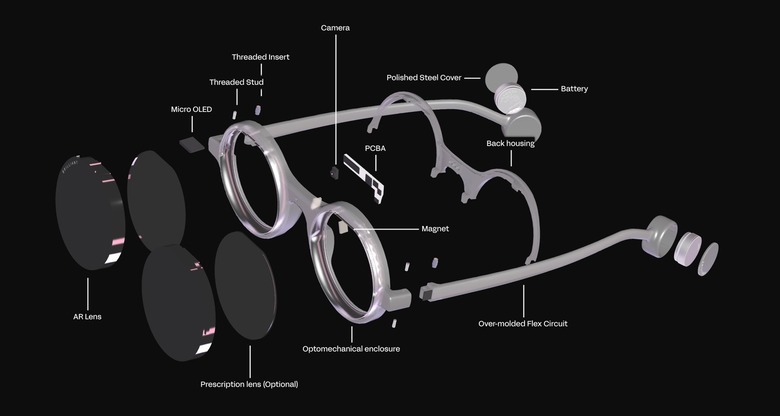

As for how the Frame will display information, the lenses are AR lenses, with a micro OLED panel sitting right at the top of them. The tiny screen is bonded to a geometric prism and displays images at a 20-degree diagonal field of view. The Frame also supports prescription lenses that can be added to the glasses.

The battery sits in the cylindrical containers at the ends of the frame. You recharge it via a "nose" that attaches between the lenses, as seen in one of these images. As for the actual frame of the... Frame, it's made of nylon plastic.

Priced at $349, the Frame will ship worldwide in mid-April. Brilliant Labs says access to AI will initially be free, subject to a daily cap. After that, a paid subscription will be required to keep those AI features working.

The Frame needs a smartphone to work.

The software is open-source, something fans of Brilliant Labs' other popular product, the Monocle AR device that preceded the Frame, will already be familiar with. AI enthusiasts will be able to customize their experience, since the company offers access to the code and documentation.

As with other early AI products, I have questions about the security and privacy of these AI interactions. Brilliant Labs doesn't explicitly address privacy on its website.

What is clear is that the AI work doesn't happen on the glasses. Unlike the Ai Pin and the r1, the Frame has no phone-class processor: it doesn't do the AI processing itself, and it doesn't have an operating system. The Noa app is the brains of the Frame, so the iPhone or Android phone is what makes the glasses work. That also explains the incredibly light build of these AI glasses.

Also, while the Frame's AR display is the product's obvious advantage over the Humane Ai Pin and the Rabbit r1, it's unclear whether Noa can handle more personal AI experiences like the ones Humane and Rabbit propose. Well, let me rephrase that.

Not even the Ai Pin can match the r1's ability to interact with apps. But Humane's AI hooks into various aspects of the user's digital life. It can capture photos and videos and access data from email and messages.

The AR glasses I want from Apple

Since I mentioned the future of the iPhone, I'd want Apple's AR glasses to perform a variety of tasks, mixing and matching what the Ai Pin, the r1, and the Frame can offer.

I'd like such a device to understand the world like the Frame, but also be a reliable assistant that can access personal data like the Ai Pin and perform in-app actions like the r1. That's too much to ask at this point in time.

But the fact that someone made the Frame with the technology available today is certainly inspiring.