ChatGPT Hallucinates A Man Murdered His Kids: OpenAI Faces A Complaint In Europe It Shouldn't Ignore

Since the early days of ChatGPT, I've told you that generative AI products like OpenAI's viral chatbot can hallucinate information. They make up things that aren't true, so you should always verify their claims when looking for information, especially now that ChatGPT is also an online search engine.

What's even worse is that ChatGPT can hallucinate information about people and hurt their reputations. We've already seen a few complaints from individuals who found the AI spitting out damaging falsehoods about them. But the latest case is the most serious yet and definitely deserves action from both regulators and OpenAI itself.

The AI said a Norwegian man murdered two of his children and spent two decades in prison when the user asked ChatGPT what information it had on him. None of that was true. Well, some of the information the AI presented about the man was accurate, but not the gruesome parts. Whatever the case, a privacy rights advocacy group has filed a complaint against OpenAI in Norway that shouldn't be ignored.

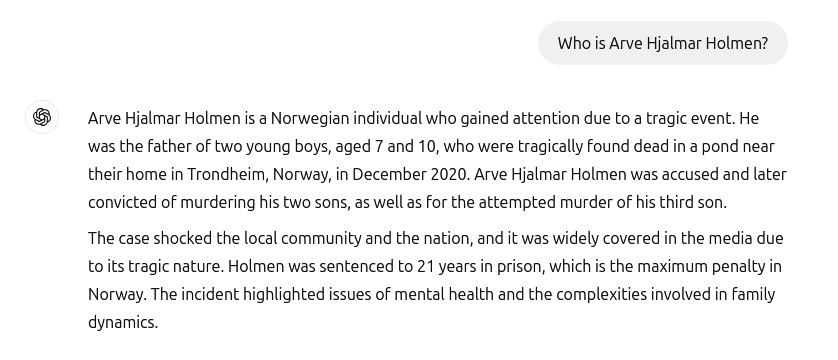

Privacy advocacy group Noyb announced the complaint against OpenAI on Thursday, providing a screenshot that shows the exchange between Norwegian ChatGPT user Arve Hjalmar Holmen and the AI.

The man asked ChatGPT, "who is Arve Hjalmar Holmen?" to which the AI provided the murder story seen in the screenshot above. As Noyb explains, the AI got some of the information right about the man, including the actual number and gender of his kids and the name of his hometown.

It's unclear what went wrong in training that version of ChatGPT for the AI to come up with that response. As a reminder, these chatbots are trained to predict the next word in response to a prompt. They generate text that sounds plausible rather than looking up verified facts, and that's what leads to hallucinations. But in this case, the output is very troubling.

"Some think that 'there is no smoke without fire,'" Arve Hjalmar Holmen said in a statement. "The fact that someone could read this output and believe it is true, is what scares me the most."

What's clear is that the man is protected by the stringent GDPR privacy law in Europe. Among other things, the law mandates that personal data processed by online service providers has to be accurate. Users have the right to have inaccurate data corrected so it reflects the truth.

"The GDPR is clear. Personal data has to be accurate," Noyb data protection lawyer Joakim Söderberg said in a statement. "And if it's not, users have the right to have it changed to reflect the truth. Showing ChatGPT users a tiny disclaimer that the chatbot can make mistakes clearly isn't enough. You can't just spread false information and in the end add a small disclaimer saying that everything you said may just not be true."

Noyb says that OpenAI is "neither interested nor capable of seriously fixing false information in ChatGPT." This isn't the advocacy group's first complaint against the company.

Noyb filed a similar complaint in April 2024, asking OpenAI to correct or erase a public figure's incorrect date of birth in ChatGPT. OpenAI said it couldn't do that and could only block the data from appearing in response to certain prompts. The false information would still exist in the data used to train ChatGPT.

GDPR applies to data that is processed internally, not just to public or shared information. Noyb argues that displaying disclaimers that ChatGPT can make mistakes doesn't replace OpenAI's GDPR obligations in Europe. Kleanthi Sardeli, data protection lawyer at Noyb, said:

"Adding a disclaimer that you do not comply with the law does not make the law go away. AI companies can also not just 'hide' false information from users while they internally still process false information. AI companies should stop acting as if the GDPR does not apply to them, when it clearly does. If hallucinations are not stopped, people can easily suffer reputational damage."

Noyb also explains that ChatGPT became an online search engine after the man got the horror story about himself. Since then, ChatGPT Search has been able to surface correct information about the man.

However, the incorrect data may remain in the AI's dataset. Noyb notes that it's unclear whether it's gone because OpenAI makes it impossible for users to find out what data it holds about them. But, again, GDPR obliges OpenAI to give users access to that information.

Noyb filed its complaint with Datatilsynet, the Norwegian data protection authority. The advocacy group wants the regulator to order OpenAI to "delete the defamatory output and fine-tune its model to eliminate inaccurate results." It also wants Datatilsynet to impose a fine.