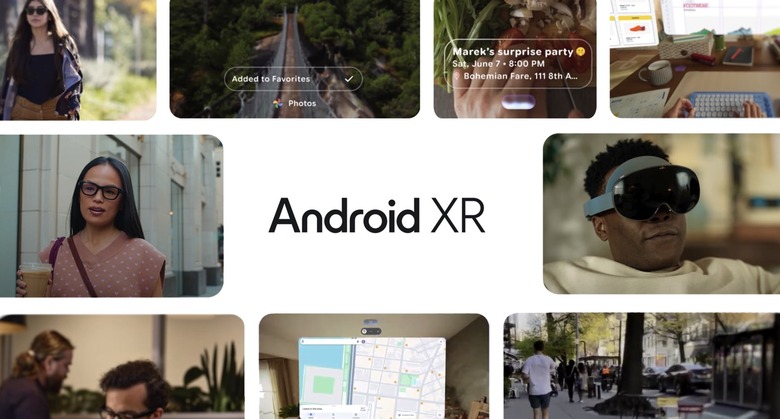

Android XR Smart Glasses With Gemini Are Coming Soon, Here's What They Can Do

When Google announced the Android XR platform in December, it felt a little rushed, as if Google was trying to prevent OpenAI from getting all the AI attention. OpenAI was in the middle of a two-week-long series of ChatGPT live streams, some more important than others. It was also in December that Google unveiled Gemini 2.0 and its first AI agents.

Fast-forward to mid-May, and we've had many Gemini 2.5 Pro developments this year. But we've hardly seen any Android XR news. Neither Google nor Samsung has addressed the Project Moohan headset shown in December, and we haven't gotten any updates on Android XR smart glasses from Google, whether for AR or AI-only experiences.

Google did say last week, during its Android 16 event ahead of I/O 2025, that Gemini will become the default assistant on many connected devices, like cars, watches, and TVs. Google also confirmed that Gemini will power Android XR gadgets, which are built around Gemini Live.

Now that Google I/O 2025 is underway in California, we finally have more details about Android XR hardware. Google plans to build stylish smart glasses with select partners. Samsung will also produce smart glasses, alongside the Project Moohan spatial computer.

While there are no release dates yet for the upcoming Android XR hardware, Google did finally showcase more Android XR features that are coming to these devices.

Google explained that Android XR glasses will work in tandem with your phone to deliver Gemini Live functionality. The AI will see from your perspective and help answer your questions. You won't need to pull out your phone to interact with the AI.

The Android XR glasses will include a camera, microphones, speakers, and an optional in-lens display. That display would allow the glasses to show AR visuals, as seen in the following screenshots.

Google acknowledged that these AR/AI glasses are only useful if people want to wear them all day, so it's teaming up with "innovative eyewear brands, including Gentle Monster and Warby Parker," to make stylish glasses with Android XR.

Google didn't share a release date or pricing, but it did show how Gemini will assist when using Android XR glasses. Developers will be able to create apps for the platform later this year.

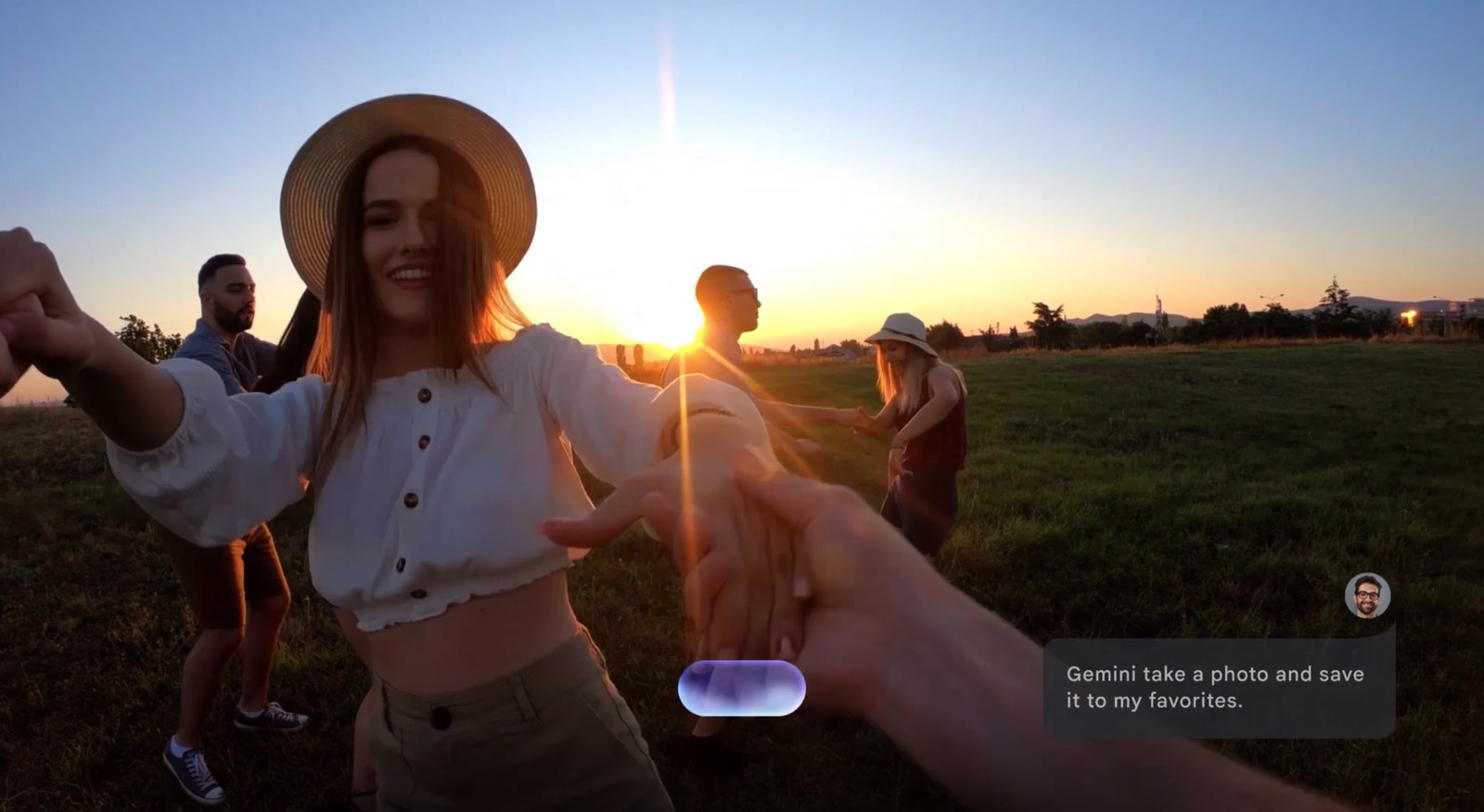

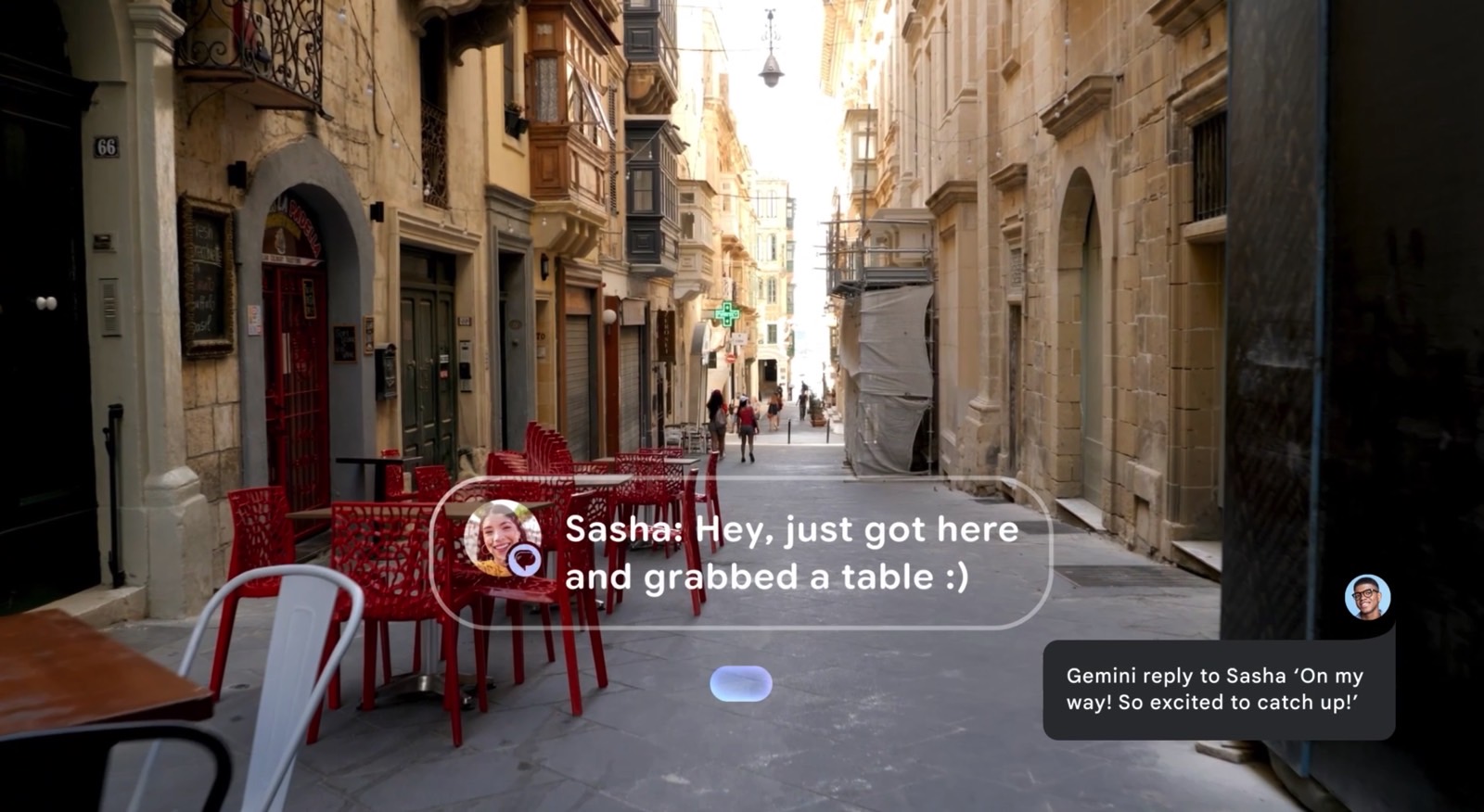

With Android XR glasses, you'll be able to talk to Gemini via voice, and the AI assistant will connect to apps and perform various actions.

For instance, the screenshots above show an interaction with Gemini while cooking. The user saves an event to Calendar and adds a to-do item to Tasks using voice commands.

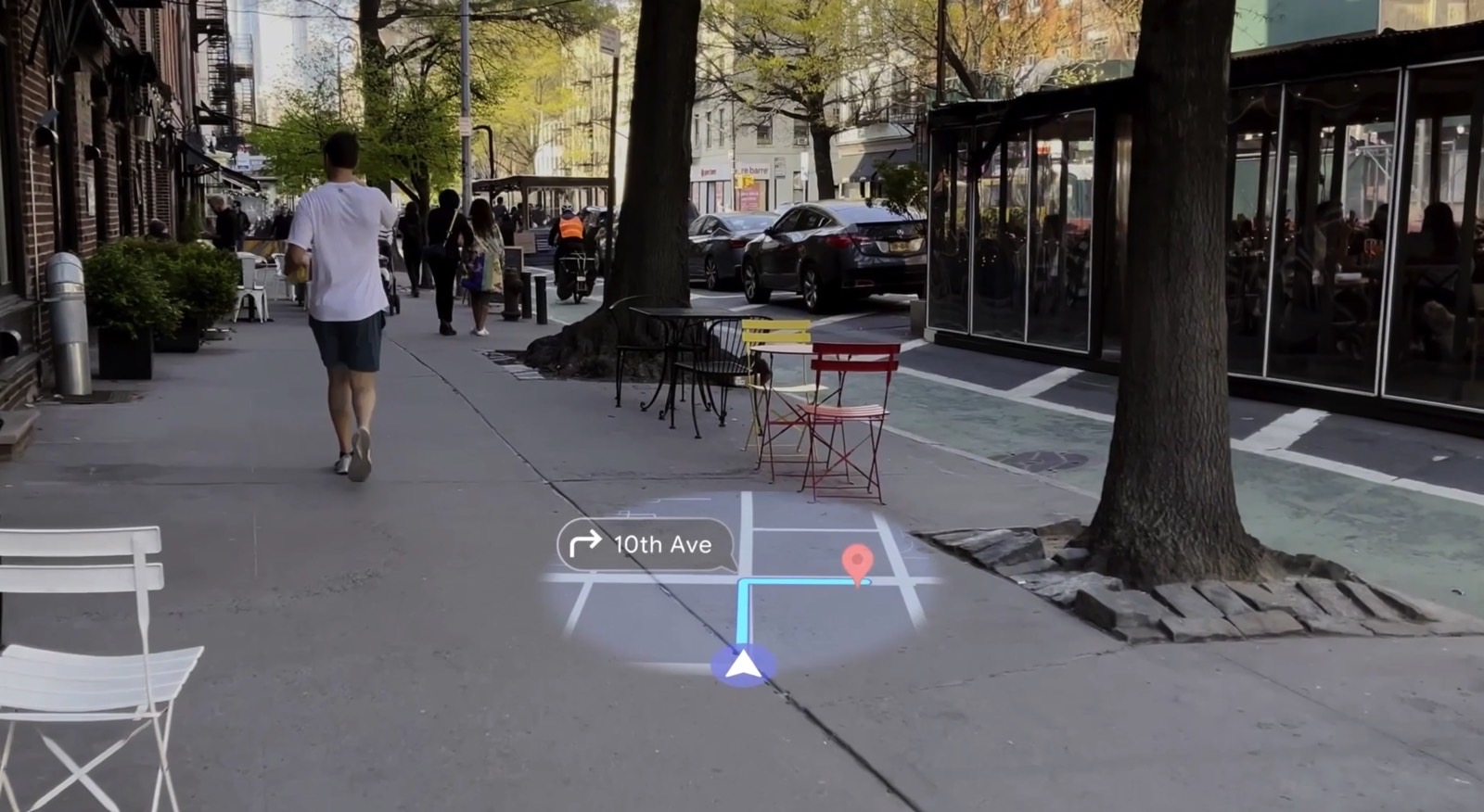

I'm personally more interested in using AI-powered glasses while out and about. Google demonstrated a feature where you ask Gemini for nearby restaurants, and the AI performs a search and then provides navigation through Google Maps.

If the Android XR glasses support AR displays, you'll see navigation guidance appear right in front of your eyes.

Texting friends or taking photos with Gemini would be just as simple, and both experiences offer an augmented reality layer if the smart glasses include in-lens displays.

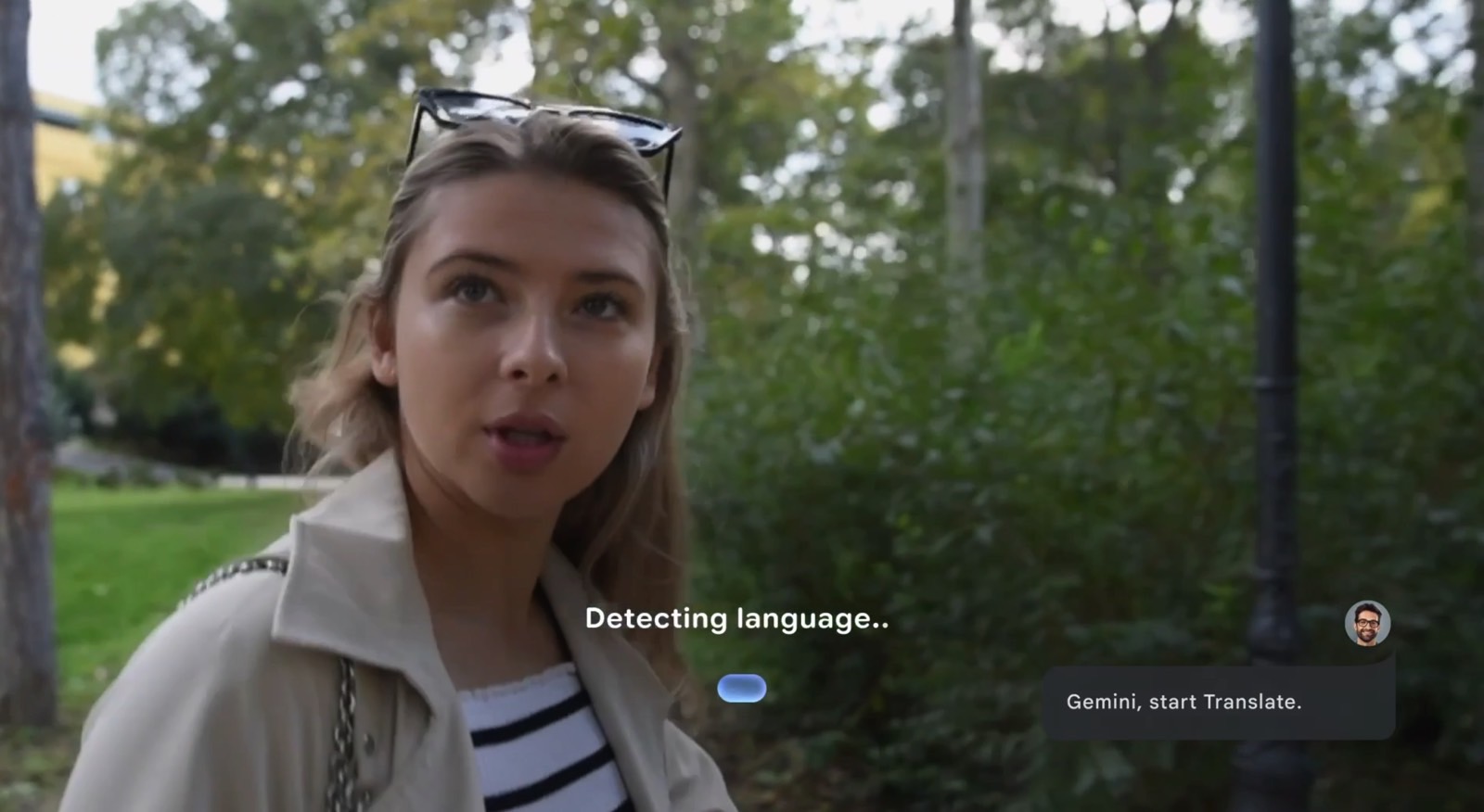

Google also demonstrated how Gemini can translate languages in real time for Android XR users, another compelling use for this tech.

That said, it's still too early to get excited about Android XR hardware without more details. Google isn't ready to share specs or pricing just yet.

Still, Android XR could give Google a strong edge, especially since competitors like OpenAI and Apple aren't expected to offer this kind of hardware anytime soon.