AI-Generated Reviews Are Here, But You Shouldn't Trust Them

Trudging through the reviews of Amazon products can be tedious, especially when you're looking for specific issues. One way Amazon and others hope to make this easier is by using generative AI to summarize the pros and cons that come up across many reviews. While the idea might seem ingenious at first glance, there are far too many potential problems to trust these AI-generated reviews right now.

Generative AI has seen some huge successes, most notably ChatGPT and Midjourney, both of which generate content from prompts. But even those success stories have had plenty of problems. For starters, a weak prompt in Midjourney can produce a range of unwanted side effects, including some deranged-looking images.

ChatGPT has similar issues. Despite OpenAI's best efforts, you're still as likely to get made-up information in response to a prompt as you are to get content ripped from somewhere else on the internet. When these systems do work, they can be quite helpful, though not to the point of "replacing the workforce," as some have claimed in an attempt to fearmonger.

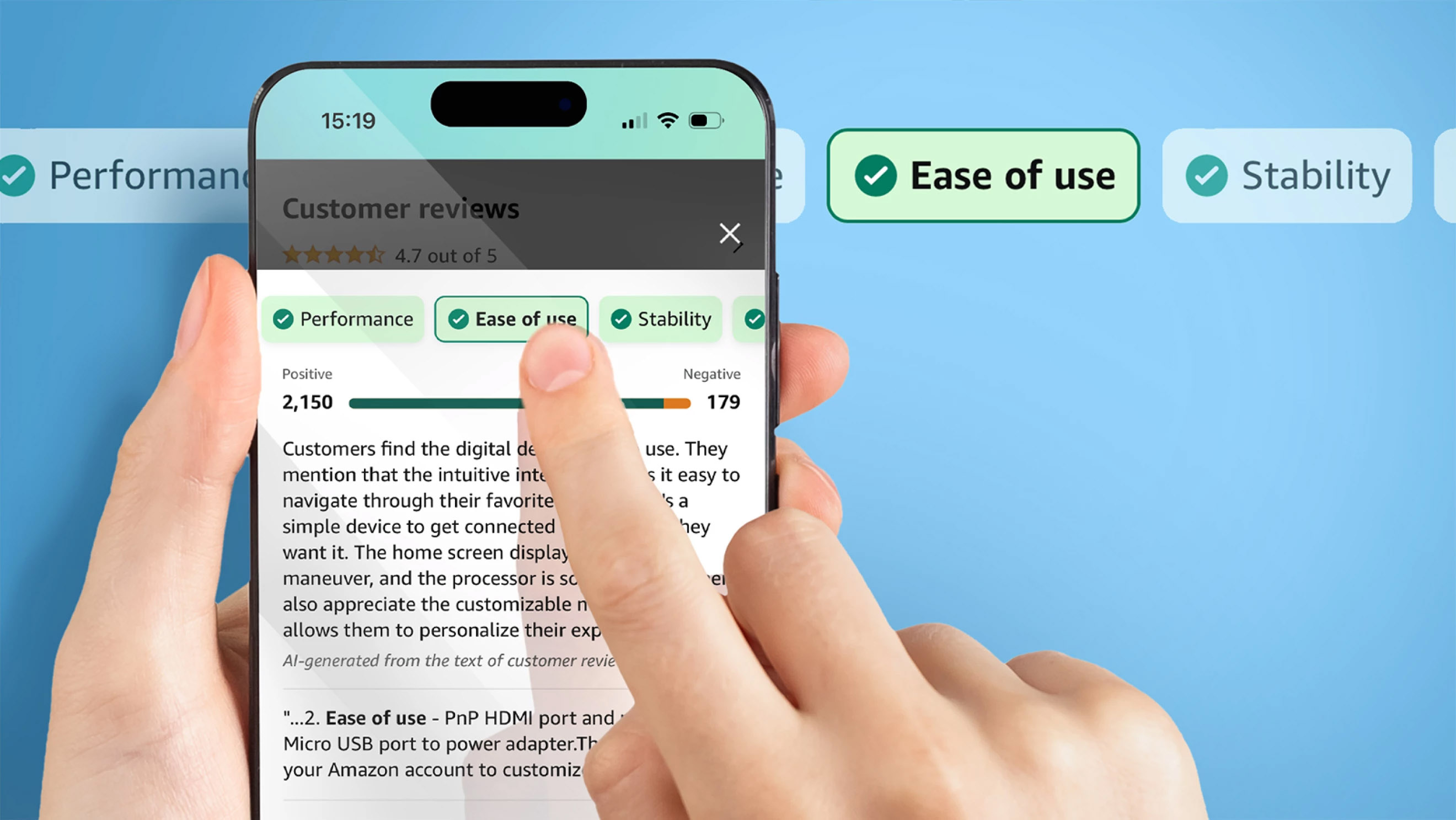

But just because we're seeing some successes doesn't mean these generative AI systems are ready for other important areas. Take Amazon's upcoming AI-generated reviews and those already appearing on other websites like Newegg.

My colleague, Jacob Siegal, has already written about just how bad Newegg's AI reviews are, and Amazon's could turn out just as bad once that system launches. Why? A lot of it comes down to nuance. ChatGPT and similar systems can respond to prompts, but it's still easy to tell AI writing from human writing, largely because of the nuances in how people write.

See, when a human writes something, it carries more emotion. There are breaks and rhythms that don't feel perfect, and more opinion mixed in, too. This is true even of academic writing, where the way researchers write their papers often reveals their own views alongside the subject matter.

It's these nuances that make human writing feel, well, human. AI just can't bring that same nuance and emotion to its writing. Reviews are largely a matter of opinion, and it isn't uncommon to read the reviews of a hugely popular item and find a wide range of opinions. So when generative AI summarizes those reviews, much of that nuance gets lost.

Newegg's AI reviews illustrate this lack of nuance well. These generative AI reviews aren't replacing human reviews; instead, they draw on them and summarize them, often missing the finer points along the way. That can lead to pros and cons getting mixed up, or to a summary that fails to capture the product's actual strengths and weaknesses.

So why are websites turning to generative AI reviews if they can't be trusted? The hope is that one day they will be trustworthy and actually capture the nuance of human-written reviews. Whether that day comes anytime soon remains to be seen, but these companies are doing all of this in the name of better consumer experiences, while also chasing the latest tech trends to stay "in the loop," so to speak.

Will it pan out in the end? That's not a question I can answer at the moment. But until generative AI systems like ChatGPT become more reliable, I'd recommend against trusting any AI-generated reviews you see on websites, even if they do make it a little easier to comb through the mass of reviews on that shiny new thing you're thinking of buying.

Ultimately, the time spent reading real, human reviews will help you make a better, more informed decision.