AI Breakthrough That Could Threaten Humanity Might Have Been Key To Sam Altman's Firing

Almost a week has passed since the OpenAI board fired CEO Sam Altman without explaining its actions. By Tuesday, the board had reinstated Altman and appointed a new board to oversee OpenAI's operations. An investigation into what happened was also promised, something I believe all ChatGPT users deserve. We're talking about a company developing an incredibly exciting resource, AI. But also one that could eradicate humanity. Or so some people fear.

Theories ran rampant in the short period between Altman's ouster and return, with some speculating that OpenAI had developed an incredibly strong GPT-5 model. Or that OpenAI had reached AGI, artificial general intelligence that could perform just as well as humans. Others suggested that the board was simply doing its job, protecting the world against the irresponsible development of AI.

It turns out the guesses and memes weren't too far off. We're not on the verge of dealing with dangerous AI, but a new report says that OpenAI delivered a massive breakthrough in the days preceding Altman's firing.

The new algorithm (Q* or Q-Star) could threaten humanity, according to a letter unnamed OpenAI researchers sent to the board. The letter and the Q-Star algorithm might have been key developments that led to the firing of Altman.

The letter

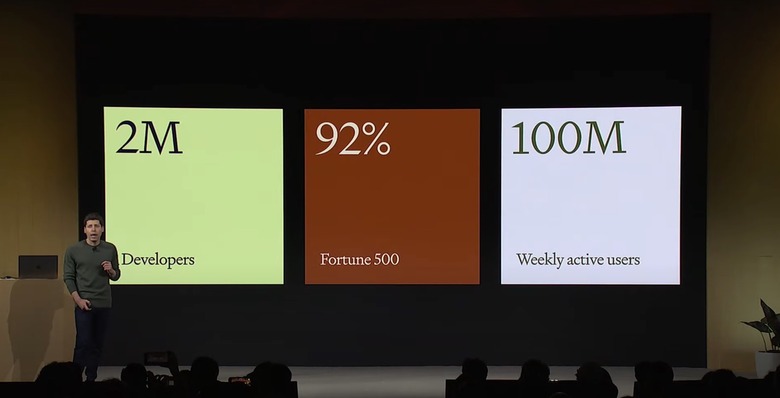

According to Reuters, which has not seen the letter, the document was only one factor in the decision. There's apparently a longer list of grievances that convinced the board to fire Altman. The board worried about the company's fast pace of commercializing ChatGPT advances before understanding the consequences.

OpenAI declined to comment to Reuters, but the company acknowledged both project Q-Star and the letter to the board in a message to staffers. Mira Murati, the first interim CEO the board appointed after letting Altman go, apparently alerted staff to the Q-Star news that was about to break.

It's too early to tell whether Q-Star is AGI, and OpenAI was busy with the CEO drama rather than making public announcements. The company might not want to announce such an innovation anytime soon, especially if caution is needed.

What can Q-Star do?

Reuters reports that the Q-Star model was able to do something generative AI can't do reliably, and that's solve math problems:

Given vast computing resources, the new model was able to solve certain mathematical problems, the person said on condition of anonymity because the individual was not authorized to speak on behalf of the company. Though only performing math on the level of grade-school students, acing such tests made researchers very optimistic about Q*'s future success, the source said.

Reuters further reports that researchers consider mastering math, where only one answer is correct, to be a big milestone for generative AI. Current generative AI programs are good at writing and language translation because they predict the next word. Answers to the same prompt might vary as a result. But with math, there can be only one correct answer.

Once math is conquered, the thinking goes, AI will have greater reasoning capabilities resembling human intelligence. After that, AI could work on novel scientific research.

What's the danger?

The letter flagged the potential danger of Q-Star, although it's unclear what the safety concerns are. Generally speaking, researchers worry that the dawn of AI might also lead to the demise of the human species.

As more intelligent AI comes along, it might decide that destroying the human species would better serve its interests. It sounds like the premise of The Terminator and The Matrix, but it's one fear AI researchers have.

It's unclear whether Q-Star is the key development that could lead there. But we'll only know after the fact.

Reuters also reports that researchers have flagged the work of a new "AI scientist" team:

Researchers have also flagged work by an "AI scientist" team, the existence of which multiple sources confirmed. The group, formed by combining earlier "Code Gen" and "Math Gen" teams, was exploring how to optimize existing AI models to improve their reasoning and eventually perform scientific work, one of the people said.

While OpenAI has yet to confirm this rumored innovation, Sam Altman did tease a big breakthrough in the days preceding his ouster.

"Four times now in the history of OpenAI, the most recent time was just in the last couple weeks, I've gotten to be in the room, when we sort of push the veil of ignorance back and the frontier of discovery forward, and getting to do that is the professional honor of a lifetime," the CEO said at the Asia-Pacific Economic Cooperation summit. A day later, he was fired.

As I said before, the world needs to know what happened here. And while Altman might be back at OpenAI, the drama isn't over. OpenAI might not be ready to talk about Q-Star publicly. But those people who are unhappy with the turn of events will surely share more details.