6 New Project Astra Features Google Announced At I/O 2025

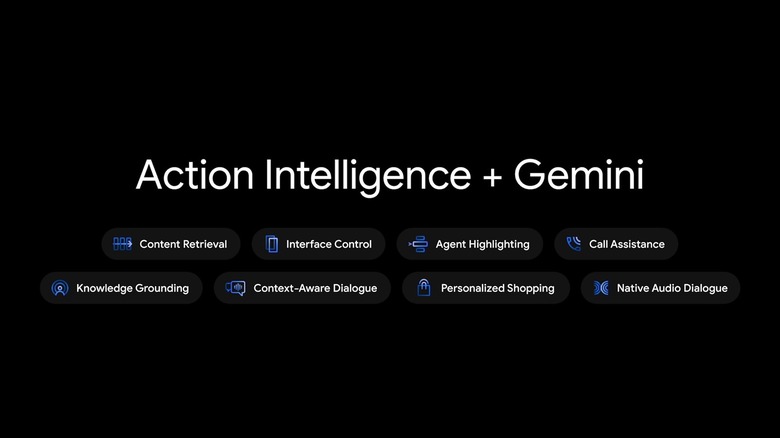

Google unveiled Project Astra at last year's I/O, showing off nascent AI technology that lets mobile users talk to Google's AI in real time using conversational language. You could ask the AI to find things on the web for you, or share your camera and screen so it can see what you see and offer guidance.

Some of those features are available via Gemini Live, Google's AI-powered assistant for Android and iPhone. But Google isn't stopping there. At I/O 2025, it showed off several new Project Astra tricks that could eventually come to Gemini Live, and it's making Gemini Live's best feature free for Android and iPhone users.

Camera and screen-sharing go free

Letting the AI see what you see in real life or on your screen is a key function for any AI-powered assistant. To make Gemini more capable, Google is making the camera and screen-sharing features in Gemini Live free for all Android and iPhone users. The features will start rolling out on Tuesday.

Gemini Live will also integrate with more Google apps soon, starting with Google Maps, Calendar, Tasks, and Keep.

Google demoed new Project Astra capabilities at I/O 2025 by showing a video of Gemini Live helping with everyday activities, like fixing a bike.

Finding manuals online and scrolling for information

In the video, the user asks Project Astra to look up the manual for the bike he's repairing. The AI browses the web, finds the document, and asks what the user wants to see next.

The user then tells Project Astra to scroll the document until it finds a section about brakes. The Android phone's screen shows Project Astra doing just that and finding the information.

This kind of agentic behavior suggests Project Astra will be able to access specific information online, even within documents.

Find the right YouTube clip

The user then asks Project Astra to find a YouTube video that shows how to deal with a stripped screw. Gemini delivers.

Look up information while using your camera

The chat continues with the user asking Project Astra to find information in Gmail about the hex nut size he needs, while pointing his camera at the parts available in his garage.

Project Astra surfaces the information and highlights the correct part in the live video feed. It's a mind-blowing feature to have at your fingertips.

Making calls on your behalf in the background

Next, the user asks Project Astra to find the nearest bike shop and call them to ask about a specific part.

Because the call involves a third party, the AI can't respond immediately. Instead, Project Astra tells the user it will follow up with the info once it has it.

The user keeps talking to Project Astra while the AI handles the call in the background.

Once the call is done, Project Astra provides the needed info while continuing to manage other tasks in parallel.

Handling multiple speakers without losing focus

While Project Astra is on the phone with the bike shop, the user asks a follow-up question about the manual. At the same time, someone else asks the user if they want lunch.

Project Astra pauses but keeps track of everything. Once the user replies to the lunch question, the AI resumes the conversation about the manual without missing a beat.

Context-aware online shopping

At the end of the clip, the user asks Project Astra to find dog baskets for his bike. The AI surfaces suggestions that would fit his dog, apparently recognizing the pet from Google Photos or past interactions.

Project Astra may also be able to make purchases, likely through Project Mariner. We don't see this in action, but when the AI confirms that the bike shop has the tension screws the user needs, it offers to place a pickup order.

These new Project Astra features won't roll out immediately. Google is collecting feedback from trusted users first. Once ready, the features will be available on mobile devices and Android XR wearables.

As a longtime ChatGPT user, I can already say I envy these capabilities. Hopefully, OpenAI is working on something similar.

UPDATE: A previous version of the story said the brand-new Project Astra features above were Gemini Live features. Google has clarified to BGR that it's working on advancing new Project Astra ideas to Gemini Live, but there's no confirmed roadmap at this point.