ChatGPT's Memory Feature Is Rolling Out Widely, But I Have One Big Reason To Worry About It

OpenAI announced a big upgrade for ChatGPT a few months ago. The chatbot would be able to retain memories from your conversations with it. This happens in two ways: You can tell ChatGPT to remember specific things about yourself or let it pick up details from chats and remember them later.

The ChatGPT conversation memory feature builds on the custom instructions that OpenAI previously enabled in ChatGPT. Custom instructions let you tell ChatGPT how to answer your prompts and give the chatbot context about yourself.

But the new conversation memory is a much bigger upgrade and another building block towards ChatGPT becoming a personal AI assistant. I'm dreaming of a personal AI chatbot that would remember things about me to make all interactions easier.

But just as OpenAI announced that conversation memory is rolling out widely, I have one big reason to worry about it: privacy.

Specifically, the memory feature will not be available in two big markets: Europe and Korea. Remember that the EU is much tougher on tech companies regarding privacy. That's why OpenAI and other companies took their time rolling out AI features in the region, and it's probably why Europe is not getting ChatGPT memory support just yet.

I already explained how to use the memory feature in ChatGPT back in February when OpenAI started testing it. I'm a ChatGPT Plus user, but I didn't get access to it at the time. It now makes sense, as OpenAI isn't bringing the feature to Europe, where I'm based.

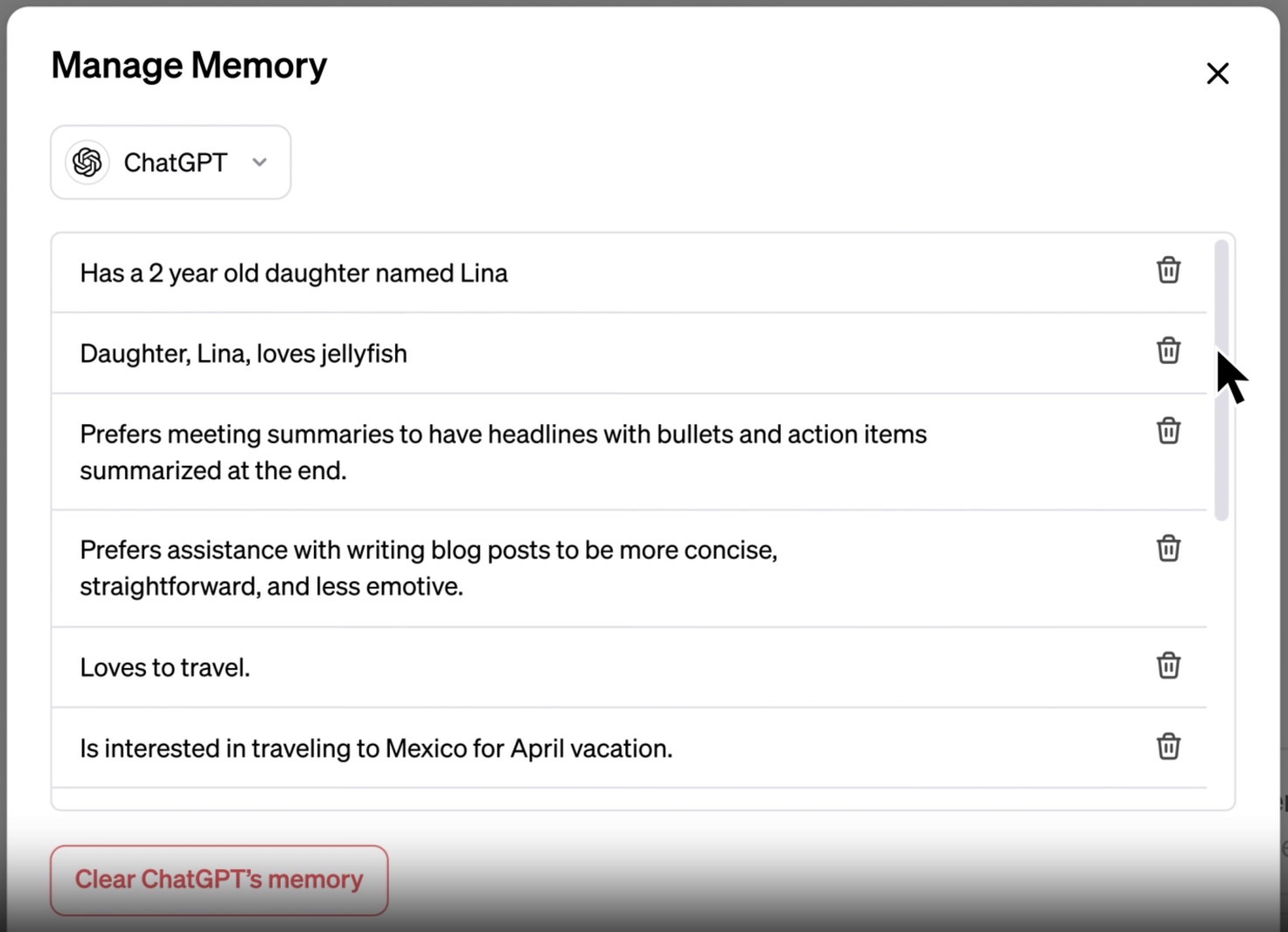

Here are a few ways you can give ChatGPT memories, according to OpenAI:

You've explained that you prefer meeting notes to have headlines, bullets and action items summarized at the bottom. ChatGPT remembers this and recaps meetings this way.

You've told ChatGPT you own a neighborhood coffee shop. When brainstorming messaging for a social post celebrating a new location, ChatGPT knows where to start.

You mention that you have a toddler and that she loves jellyfish. When you ask ChatGPT to help create her birthday card, it suggests a jellyfish wearing a party hat.

As a kindergarten teacher with 25 students, you prefer 50-minute lessons with follow-up activities. ChatGPT remembers this when helping you create lesson plans.

This is a great start, of course, and things will evolve from there, both in what ChatGPT can remember and in how you teach it things about you.

In an update to the blog post published in February, OpenAI announced that ChatGPT will also be able to update memories and notify you about it:

Update: Memory is now available to all ChatGPT Plus users except those in Europe or Korea. Based on feedback from our earlier test, ChatGPT now lets you know when memories are updated. We've also made it easier to access all memories when updates occur—hover over "Memory updated," then click "Manage memories" to review everything ChatGPT has picked up from your conversations and forget any unwanted memories. You can still access memories at any time in settings.

The same update says that paying ChatGPT subscribers from Europe and Korea will not get the memory feature. OpenAI offers no explanation, but privacy is the only one that makes sense to me right now.

I'll remind you that ChatGPT prompts are used to train the chatbot. You can opt out but risk losing your ChatGPT history if you don't do it correctly. It's unclear to me how OpenAI handles the privacy of memories. OpenAI said in February that the new memory feature will impact its privacy and safety standards:

Memory brings additional privacy and safety considerations, such as what type of information should be remembered and how it's used. We're taking steps to assess and mitigate biases, and steer ChatGPT away from proactively remembering sensitive information, like your health details – unless you explicitly ask it to.

This is still not detailed enough for my taste. I am a ChatGPT Plus user who wouldn't mind having the chatbot remember things about me. It's the only way we'll get to personal AI. But I want to know exactly what happens to my data after that. I don't want ChatGPT to use my memories, whether superficial or sensitive, for training.

ChatGPT memory privacy is a lot better for Team and Enterprise users:

As with any ChatGPT feature, you're in control of your organization's data. Memories and any other information on your workspace are excluded from training our models. Users have control on how and when their memories are used in chats. In addition, Enterprise account owners can turn memory off for their organization at any time.

I hope the same protections will come to ChatGPT Plus users who want to take advantage of the memory features.

Put differently, I wouldn't rely too much on the memory feature just yet, even if it were available to me in Europe. Instead, I'd wait for more updates from OpenAI on how my data is handled.