Scientists Built A Robot That Taught Itself What It Is

AI researchers have repeatedly shown that telling a machine to teach itself how to do a certain task is a pretty great strategy. Trial and error over countless attempts often produces a machine or program that is highly skilled.

A new research effort took that concept to an entirely different level by not only tasking a robot with completing an objective, but also forcing it to learn about itself in the process. The robot, which had to figure out that it is a robot, didn't even know what its own body looked like when the tests began, but that quickly changed.

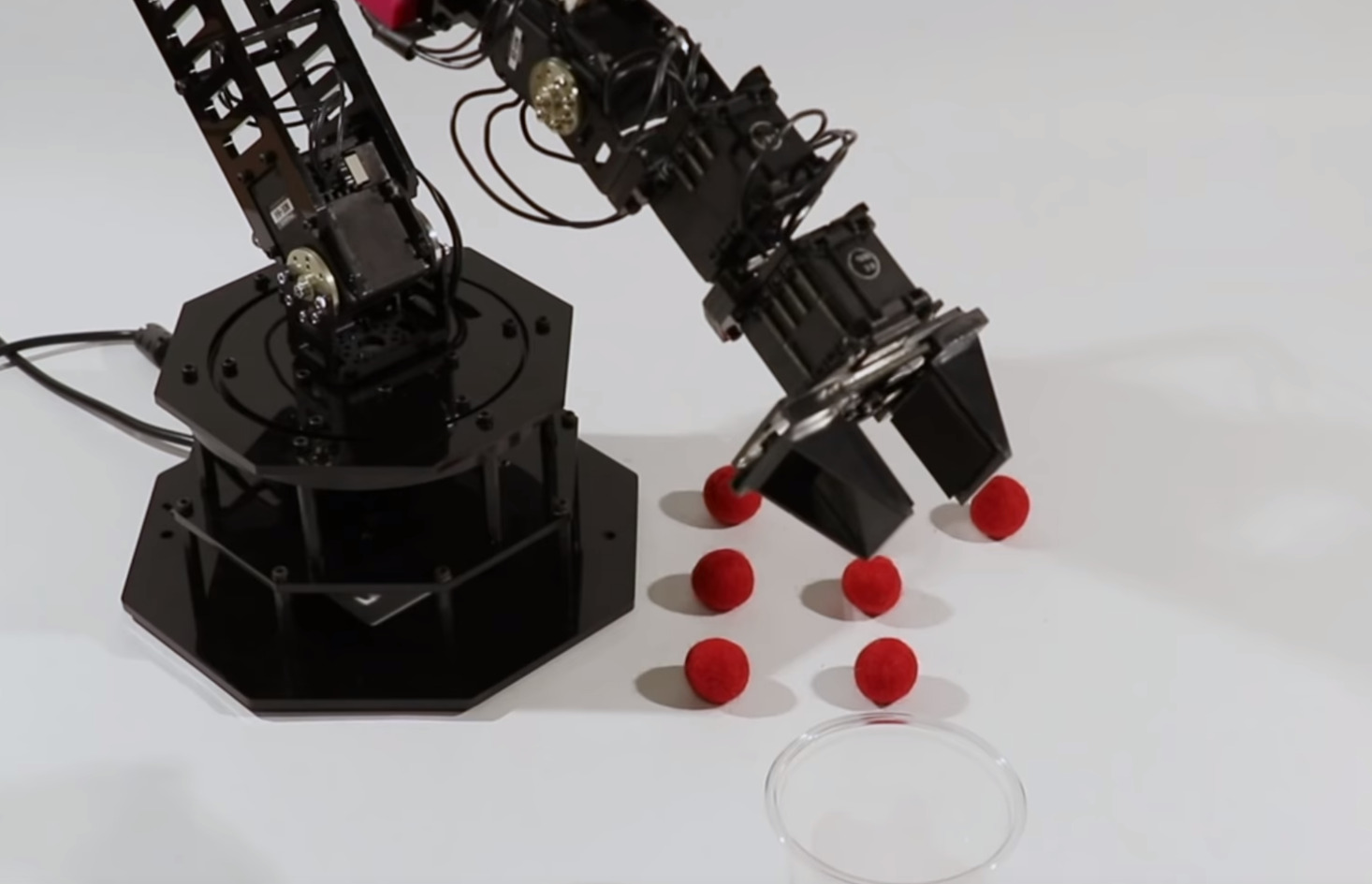

In the paper, which was published in Science Robotics, the scientists explain how the robot's first big hurdle was figuring out how it was shaped. The robot consisted of a long arm with several joints, and at first the robot just flailed randomly as it tried to determine its own capabilities and range of motion.

Eventually, the robot built up enough knowledge to accurately move its mechanical "hand," pick up objects, and place them in a container with 100% accuracy, all without ever being told how to actually do so.
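To make the idea of learning from random flailing a bit more concrete, here is a toy Python sketch. It is not the researchers' method, and everything in it is an assumption made for illustration: a hypothetical two-joint flat arm, made-up link lengths, and a very crude "self-model" that is nothing more than a memory of past movements and where the hand ended up. The learner never reads the arm's dimensions directly; it only sees the results of its own motions.

```python
import math
import random

# Hypothetical "physical" arm. The learner never reads these link lengths;
# it only sees where the hand ends up after each movement.
_TRUE_LINKS = (1.0, 0.7)


def observe_hand(angles):
    """Stand-in for the real robot: report the hand position for two joint angles."""
    a1, a2 = angles
    x = _TRUE_LINKS[0] * math.cos(a1) + _TRUE_LINKS[1] * math.cos(a1 + a2)
    y = _TRUE_LINKS[0] * math.sin(a1) + _TRUE_LINKS[1] * math.sin(a1 + a2)
    return (x, y)


def babble(n_trials, rng):
    """Random flailing: try random joint angles and remember what happened."""
    memory = []
    for _ in range(n_trials):
        angles = (rng.uniform(-math.pi, math.pi), rng.uniform(-math.pi, math.pi))
        memory.append((angles, observe_hand(angles)))
    return memory


def plan_reach(memory, target):
    """Crude self-model: reuse the remembered angles whose outcome was closest to the target."""
    best_angles, _ = min(memory, key=lambda rec: math.dist(rec[1], target))
    return best_angles


rng = random.Random(0)          # fixed seed so the run is repeatable
memory = babble(5000, rng)      # the "flailing" phase
target = (0.9, 0.8)             # where we want the hand to go
angles = plan_reach(memory, target)
error = math.dist(observe_hand(angles), target)
print(f"missed the target by {error:.3f} units")
```

The more the toy arm flails, the denser its memory becomes and the closer it can get to any reachable target. A real system would need something far more capable than a lookup table, but the loop is the same in spirit: move, observe, remember, then use what was learned to act.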

Initially, the robot was helped by its ability to measure its own movements, much as a human can watch where their hand is going as they move it. When that ability was removed, the robot had a tougher time completing the task, but still managed an impressive 44% accuracy when picking up and placing the objects. The researchers compare this to the difficulty of a human finding and picking up a glass of water while blindfolded.

This may be a far cry from a robot that is actually self-aware, but it's an interesting experiment that shows how algorithms are capable of giving a robot the ability to learn about itself as well as its surroundings.