Apple Mixed Reality Glasses Might Feature Eye Tracking And Iris Recognition Tech

Apple is widely rumored to be working on several types of smart glasses that will launch in the coming years. According to some rumors, a mixed reality (MR) device would deliver both virtual reality (VR) and augmented reality (AR) features. Additionally, Apple is said to be developing a more nimble AR headset that would look more like current smart glasses designs than bulky VR headsets.

An increasing number of reports have already detailed Apple's VR and AR glasses plans, with a few of them coming from Ming-Chi Kuo, an analyst whose connections with Apple's complex supply chain have allowed him to make several accurate predictions about unreleased Apple products. Kuo has offered various details about Apple's smart glasses projects in recent weeks. His newest report provides additional clues about two features that Apple's mixed reality glasses might include. To avoid confusion with the AR glasses, we're going to refer to the mixed reality device as the VR glasses or VR headset.

According to MacRumors, the analyst penned a new note to investors in which he addressed eye tracking and iris scanning technology that Apple might be developing for the VR headset.

Apple already has patents detailing technology that can track eye movement. A previous report said that the VR glasses would feature several cameras, including eye trackers. Tracking eye movements would allow the software to dynamically adapt the resolution of the images on the screen so that only the part in focus is shown at the maximum 8K resolution. Everything in the user's peripheral vision would be displayed at a lower resolution to conserve battery life. As the user moves their eyes, the image on the screen would adapt accordingly.
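This approach is commonly known as foveated rendering. As a rough illustration of the idea only, here is a minimal Python sketch that picks a resolution scale for one region of the screen based on how far it sits from the point the user is looking at. The function name, the pixel radii, and the minimum scale are all hypothetical values chosen for the example; nothing here reflects how Apple's software actually works.

```python
import math

# Hypothetical values for illustration; none come from Apple or Kuo.
FOVEA_RADIUS_PX = 200     # region around the gaze point drawn at full resolution
FALLOFF_RADIUS_PX = 800   # beyond this distance, the lowest resolution is used
MIN_SCALE = 0.25          # lowest resolution scale, used in the periphery


def render_scale(tile_center, gaze_point):
    """Return a resolution scale between MIN_SCALE and 1.0 for one screen tile."""
    distance = math.dist(tile_center, gaze_point)
    if distance <= FOVEA_RADIUS_PX:
        return 1.0
    if distance >= FALLOFF_RADIUS_PX:
        return MIN_SCALE
    # Linear falloff between the in-focus region and the periphery.
    t = (distance - FOVEA_RADIUS_PX) / (FALLOFF_RADIUS_PX - FOVEA_RADIUS_PX)
    return 1.0 - t * (1.0 - MIN_SCALE)
```

Each time the eye tracker reports a new gaze point, a renderer built along these lines would recompute the scale for every tile, so the sharp region follows the user's eyes.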

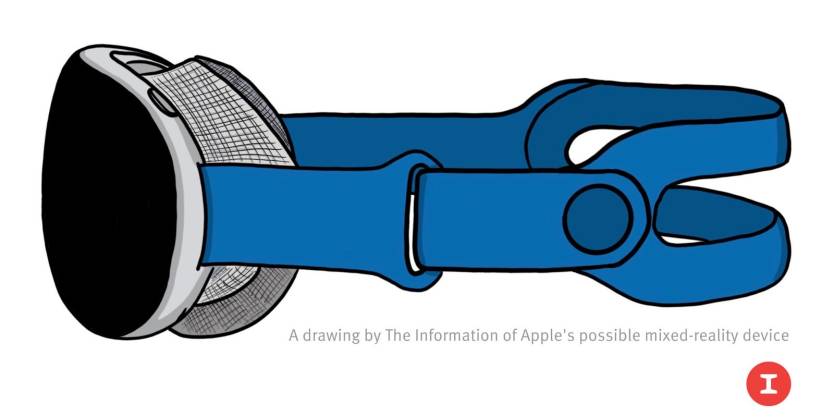

A render of the purported design of Apple's mixed-reality glasses.

Kuo's new note offers more details on how the system works:

Apple's eye tracking system includes a transmitter and a receiver. The transmitting end provides one or several different wavelengths of invisible light, and the receiving end detects the change of the invisible light reflected by the eyeball, and judges the eyeball movement based on the change.

The analyst said that most head-mounted displays are operated with handheld controllers, which can't provide a smooth experience. Eye tracking could help by allowing Apple to create new user experiences in which users control the glasses with their eyes. He also mentioned the "reduced computational burden" that comes from lowering the resolution in the parts of the image where the user isn't looking.

Kuo also said that, based on the hardware specifications, an iris recognition feature should be possible on the glasses. He added that iris recognition could be used for a "more intuitive Apple Pay method."

Iris recognition could allow smart glasses to authenticate users and ensure that they can access apps and features tied to a particular Apple account. Also, unlocking smart glasses with iris recognition could allow the user to unlock other Apple devices nearby. However, this is only speculation based on how Apple's current authentication protocols work and on recent patents from the company.

Kuo previously said that the VR device could launch in mid-2022, while the AR glasses would arrive only in 2025.

Apple isn't the only company developing smart glasses. Facebook recently unveiled wrist wearables that can "read the mind" of users. The gadgets would pick up nerve signals and translate them into actions on the screen. That includes turning any surface into a keyboard that's visible only to the wearer of the glasses. Facebook said it didn't have a timeline for launching commercial products that would support these features.