When Google unveiled the Pixel 2 series last fall, the company made a big deal about its camera tech and stressed that it doesn’t need two cameras to offer a portrait mode similar to the one Apple introduced a year earlier.

Portrait mode on the iPhone Plus models and the iPhone X requires two cameras. Google’s Pixel 2 phones, meanwhile, do it with a single lens. That’s because Google uses AI software to generate portrait shots, although the results may differ from Apple’s.

Google is now ready to make part of that technology available to any phone maker. It isn’t open-sourcing the entire Pixel 2 camera stack or the phones’ actual portrait mode, just the AI tool that makes it possible.

The company announced the move in a blog post earlier this week, but nobody will blame you if you missed it. It was published on the research blog under an unappealing title, “Semantic Image Segmentation with DeepLab in TensorFlow.” Unless you’re into AI, that is, in which case you’ve probably seen it already.

What Google is open-sourcing is the DeepLab-v3+ code, an image segmentation tool that’s built using neural networks. Google has been employing machine learning for a few years now, looking to improve the quality and smarts of its Camera and Google Photos apps.

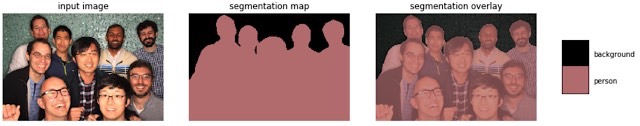

The image segmentation part is the interesting bit here, and it’s what allows the Pixel 2 to take portrait mode shots with a single camera. The AI recognizes objects in images in real time, which is what makes the depth-of-field effect possible. As Google puts it:

> Semantic image segmentation, the task of assigning a semantic label, such as “road”, “sky”, “person”, “dog”, to every pixel in an image enables numerous new applications, such as the synthetic shallow depth-of-field effect shipped in the portrait mode of the Pixel 2 and Pixel 2 XL smartphones and mobile real-time video segmentation. Assigning these semantic labels requires pinpointing the outline of objects, and thus imposes much stricter localization accuracy requirements than other visual entity recognition tasks such as image-level classification or bounding box-level detection.
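To make “assigning a semantic label to every pixel” concrete: a segmentation network typically outputs a score for each class at each pixel, and the predicted label is simply the highest-scoring class. The Python below is a toy sketch of that last step only. The class list borrows the examples from Google’s quote, and the scores are made up; this is not DeepLab’s code or its real label set.

```python
from typing import List

# Hypothetical label set, taken from the examples in Google's quote.
CLASSES = ["road", "sky", "person", "dog"]


def label_map(scores: List[List[List[float]]]) -> List[List[str]]:
    """scores[y][x] holds one score per class for the pixel at (x, y).

    Returns, for every pixel, the name of its highest-scoring class
    (a per-pixel argmax), which is what a semantic label map is.
    """
    return [
        [CLASSES[max(range(len(px)), key=px.__getitem__)] for px in row]
        for row in scores
    ]


# A made-up 2x2 "image": two sky pixels on top, a person and a dog below.
scores = [
    [[0.1, 0.8, 0.05, 0.05], [0.2, 0.7, 0.05, 0.05]],
    [[0.1, 0.1, 0.7, 0.1], [0.1, 0.1, 0.2, 0.6]],
]
print(label_map(scores))  # [['sky', 'sky'], ['person', 'dog']]
```

The hard part, as the quote notes, is getting those per-pixel scores right along object outlines; the argmax itself is trivial.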

App developers and device makers can now use this Google technology to create their own Pixel 2-like bokeh effects on devices that lack a secondary camera. That doesn’t mean other Android devices are about to replicate the entire Pixel 2 experience or its portrait mode, since Google isn’t sharing those secrets. But this semantic image segmentation tool is a step in that direction.
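Once a segmentation mask marks which pixels belong to the subject, a basic single-camera bokeh effect comes down to compositing: blur the whole frame, then put the original subject pixels back on top. The Python below is a minimal sketch under that assumption, using a grayscale image stored as nested lists and a hypothetical `fake_bokeh` helper. It is not Google’s portrait-mode pipeline, and the uniform box blur is only a simple stand-in for a lens-like blur.

```python
from typing import List

Image = List[List[float]]  # grayscale pixels, indexed as image[y][x]


def box_blur(img: Image, radius: int) -> Image:
    """Replace each pixel with the mean of its neighbours within `radius`.

    The window is clipped at the image borders, so edge pixels average
    over fewer neighbours.
    """
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            for yy in range(max(0, y - radius), min(h, y + radius + 1)):
                for xx in range(max(0, x - radius), min(w, x + radius + 1)):
                    total += img[yy][xx]
                    count += 1
            out[y][x] = total / count
    return out


def fake_bokeh(img: Image, mask: List[List[bool]], radius: int = 1) -> Image:
    """Keep pixels where mask is True (e.g. "person") sharp; blur the rest."""
    blurred = box_blur(img, radius)
    return [
        [img[y][x] if mask[y][x] else blurred[y][x] for x in range(len(img[0]))]
        for y in range(len(img))
    ]


# A 3x3 frame with a bright "subject" pixel in the middle.
img = [[0, 0, 0], [0, 9, 0], [0, 0, 0]]
mask = [[False] * 3, [False, True, False], [False] * 3]
result = fake_bokeh(img, mask)
print(result[1][1], result[0][0], result[0][1])  # 9 2.25 1.5
```

The subject pixel stays sharp while its brightness bleeds into the blurred background. A production effect would also soften the mask edges so the cut-out doesn’t look pasted on.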